
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

docs: add hugging face example cookbook (#1154)

* docs: add hugging face example cookbook
* convert to md
* fix spelling
* explained "tgi" model name and added note
* created md files


1 parent: b62287e
commit e692ee0

Showing 8 changed files with 693 additions and 3 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,314 @@ | ||

| { | ||

| "cells": [ | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "---\n", | ||

| "description: Example notebook on how to monitor Hugging Face models with Langfuse using the OpenAI SDK\n", | ||

| "category: Integrations\n", | ||

| "---\n", | ||

| "\n", | ||

| "# Cookbook: Monitor 🤗 Hugging Face Models with 🪢 Langfuse\n", | ||

| "\n", | ||

| "This cookbook shows you how to monitor Hugging Face models using the OpenAI SDK integration with [Langfuse](https://langfuse.com). This allows you to collaboratively debug, monitor and evaluate your LLM applications.\n", | ||

| "\n", | ||

| "With this integration, you can test and evaluate different models, monitor your application's cost and assign scores such as user feedback or human annotations.\n", | ||

| "\n", | ||

| "**Note**: *In this example, we use the OpenAI SDK to [access the Hugging Face inference APIs](https://huggingface.co/blog/tgi-messages-api). You can also use other frameworks, such as [LangChain](https://langfuse.com/docs/integrations/langchain/tracing), or ingest the data via our [API](https://api.reference.langfuse.com/).*\n", | ||

| "\n", | ||

| "## Setup\n", | ||

| "\n", | ||

| "### Install Required Packages" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": null, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "%pip install langfuse openai --upgrade" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Set Environment Variables\n", | ||

| "\n", | ||

| "Set up your environment variables with the necessary keys. Get keys for your Langfuse project from [Langfuse Cloud](https://cloud.langfuse.com). Also, obtain an access token from [Hugging Face](https://huggingface.co/settings/tokens).\n" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 17, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "import os\n", | ||

| "\n", | ||

| "# Get keys for your project from https://cloud.langfuse.com\n", | ||

| "os.environ[\"LANGFUSE_SECRET_KEY\"] = \"sk-lf-...\" # Private Project\n", | ||

| "os.environ[\"LANGFUSE_PUBLIC_KEY\"] = \"pk-lf-...\" # Private Project\n", | ||

| "os.environ[\"LANGFUSE_HOST\"] = \"https://cloud.langfuse.com\" # 🇪🇺 EU region\n", | ||

| "# os.environ[\"LANGFUSE_HOST\"] = \"https://us.cloud.langfuse.com\" # 🇺🇸 US region\n", | ||

| "\n", | ||

| "os.environ[\"HUGGINGFACE_ACCESS_TOKEN\"] = \"hf_...\"" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Import Necessary Modules\n", | ||

| "\n", | ||

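| "Instead of importing `openai` directly, import the `OpenAI` client from `langfuse.openai`. Also import the `observe` decorator from `langfuse.decorators`, which is used later to group generations into a single trace." | ||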

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 18, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# Instead of: import openai\n", | ||

| "from langfuse.openai import OpenAI\n", | ||

| "from langfuse.decorators import observe" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Initialize the OpenAI Client for Hugging Face Models\n", | ||

| "\n", | ||

| "Initialize the OpenAI client, but point it to the Hugging Face model endpoint. You can use any model that Hugging Face serves through an OpenAI-compatible chat completions endpoint. Replace the endpoint URL and access token with your own.\n", | ||

| "\n", | ||

| "For this example, we use the `Meta-Llama-3-8B-Instruct` model." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 19, | ||

| "metadata": {}, | ||

| "outputs": [], | ||

| "source": [ | ||

| "# Initialize the OpenAI client, pointing it to the Hugging Face Inference API\n", | ||

| "client = OpenAI(\n", | ||

| " base_url=\"https://api-inference.huggingface.co/models/meta-llama/Meta-Llama-3-8B-Instruct\" + \"/v1/\", # replace with your endpoint URL\n", | ||

| " api_key=os.getenv('HUGGINGFACE_ACCESS_TOKEN'), # read from the environment variable set above\n", | ||

| ")" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "## Examples\n", | ||

| "\n", | ||

| "### Chat Completion Request\n", | ||

| "\n", | ||

| "Use the `client` to make a chat completion request to the Hugging Face model. The `model` parameter can be any identifier, since the actual model is specified in the `base_url`. In this example, we set `model` to `tgi`, short for [Text Generation Inference](https://huggingface.co/docs/text-generation-inference/en/index)." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 20, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "In silicon halls, where data reigns\n", | ||

| "A marvel emerges, a linguistic brain\n", | ||

| "A language model,365, so they claim\n", | ||

| "Generated by code, yet learns to proclaim\n", | ||

| "\n", | ||

| "It digs and mines, through texts of old\n", | ||

| "Captures nuances, young and bold\n", | ||

| "From syntax to semantics, it makes its way\n", | ||

| "Unraveling meaning, day by day\n", | ||

| "\n", | ||

| "With neural nets, it weaves its spell\n", | ||

| "Assembling words, a story to tell\n", | ||

| "From command to conversation, it learns to\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "completion = client.chat.completions.create(\n", | ||

| " model=\"tgi\",\n", | ||

| " messages=[\n", | ||

| " {\"role\": \"system\", \"content\": \"You are a helpful assistant.\"},\n", | ||

| " {\n", | ||

| " \"role\": \"user\",\n", | ||

| " \"content\": \"Write a poem about language models\"\n", | ||

| " }\n", | ||

| " ]\n", | ||

| ")\n", | ||

| "print(completion.choices[0].message.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "\n", | ||

| "\n", | ||

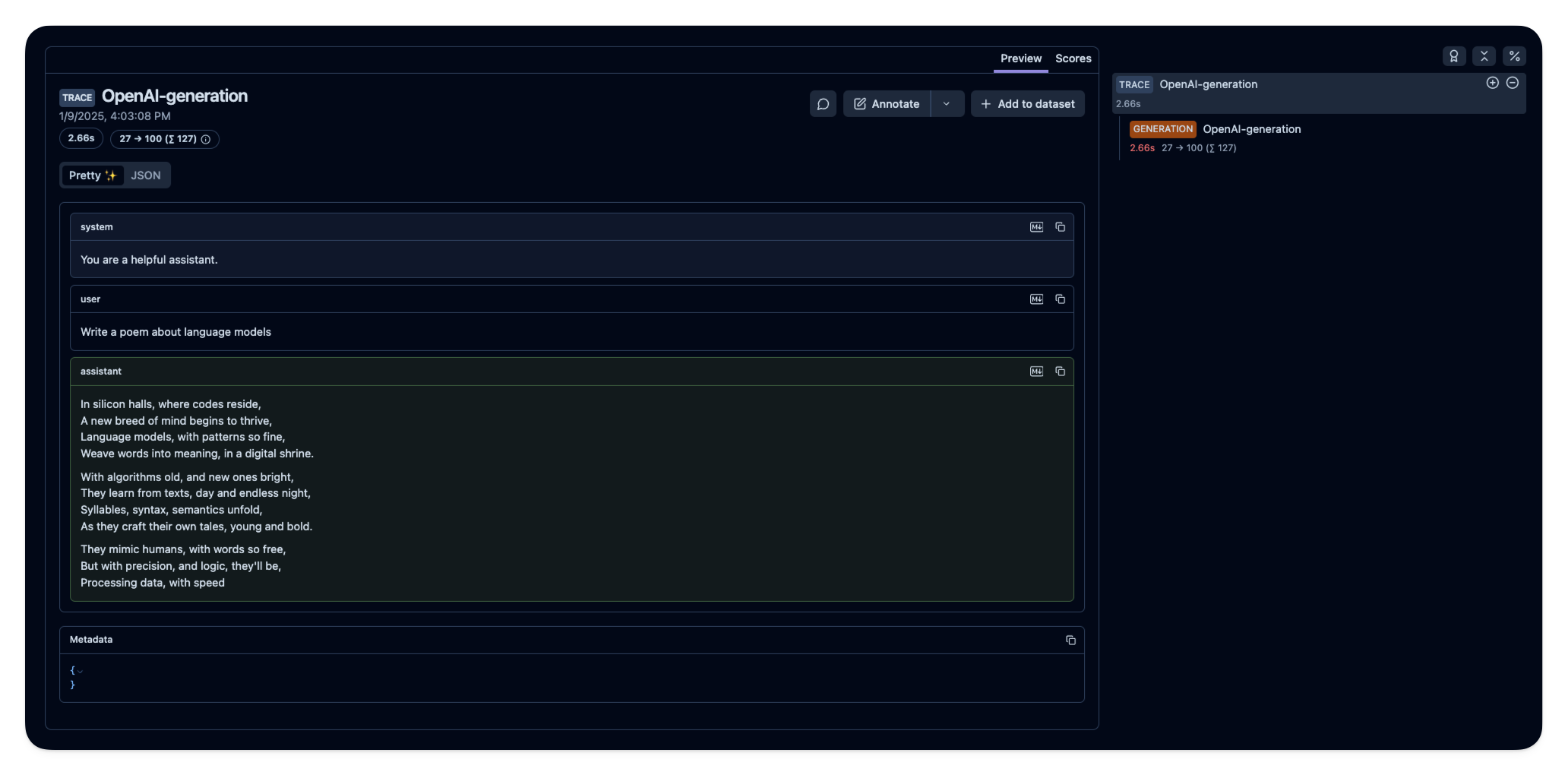

| "*[Example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/0c205096-fbd9-48b9-afa3-5837483488d8?timestamp=2025-01-09T15%3A03%3A08.365Z)*" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Observe the Request with Langfuse\n", | ||

| "\n", | ||

| "By using the `OpenAI` client from `langfuse.openai`, your requests are automatically traced in Langfuse. You can also use the `@observe()` decorator to group multiple generations into a single trace." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 13, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "(The rhythm is set, the beat's on fire)\n", | ||

| "Yo, listen up, it's time to acquire\n", | ||

| "Knowledge on Langfuse, the game is dire\n", | ||

| "Open-source, the future is higher\n", | ||

| "LLM engineering platform, no need to question the fire\n", | ||

| "\n", | ||

| "Verse 1:\n", | ||

| "Langfuse, the brainchild of geniuses bright\n", | ||

| "Built with passion, crafted with precision and might\n", | ||

| "A platform so versatile, it's a beautiful sight\n", | ||

| "For developers who dare to take flight\n", | ||

| "They're building\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "@observe() # Decorator to automatically create a trace and nest generations\n", | ||

| "def generate_rap():\n", | ||

| " completion = client.chat.completions.create(\n", | ||

| " name=\"rap-generator\",\n", | ||

| " model=\"tgi\",\n", | ||

| " messages=[\n", | ||

| " {\"role\": \"system\", \"content\": \"You are a poet.\"},\n", | ||

| " {\"role\": \"user\", \"content\": \"Compose a rap about the open source LLM engineering platform Langfuse.\"}\n", | ||

| " ],\n", | ||

| " metadata={\"category\": \"rap\"},\n", | ||

| " )\n", | ||

| " return completion.choices[0].message.content\n", | ||

| "\n", | ||

| "rap = generate_rap()\n", | ||

| "print(rap)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "\n", | ||

| "\n", | ||

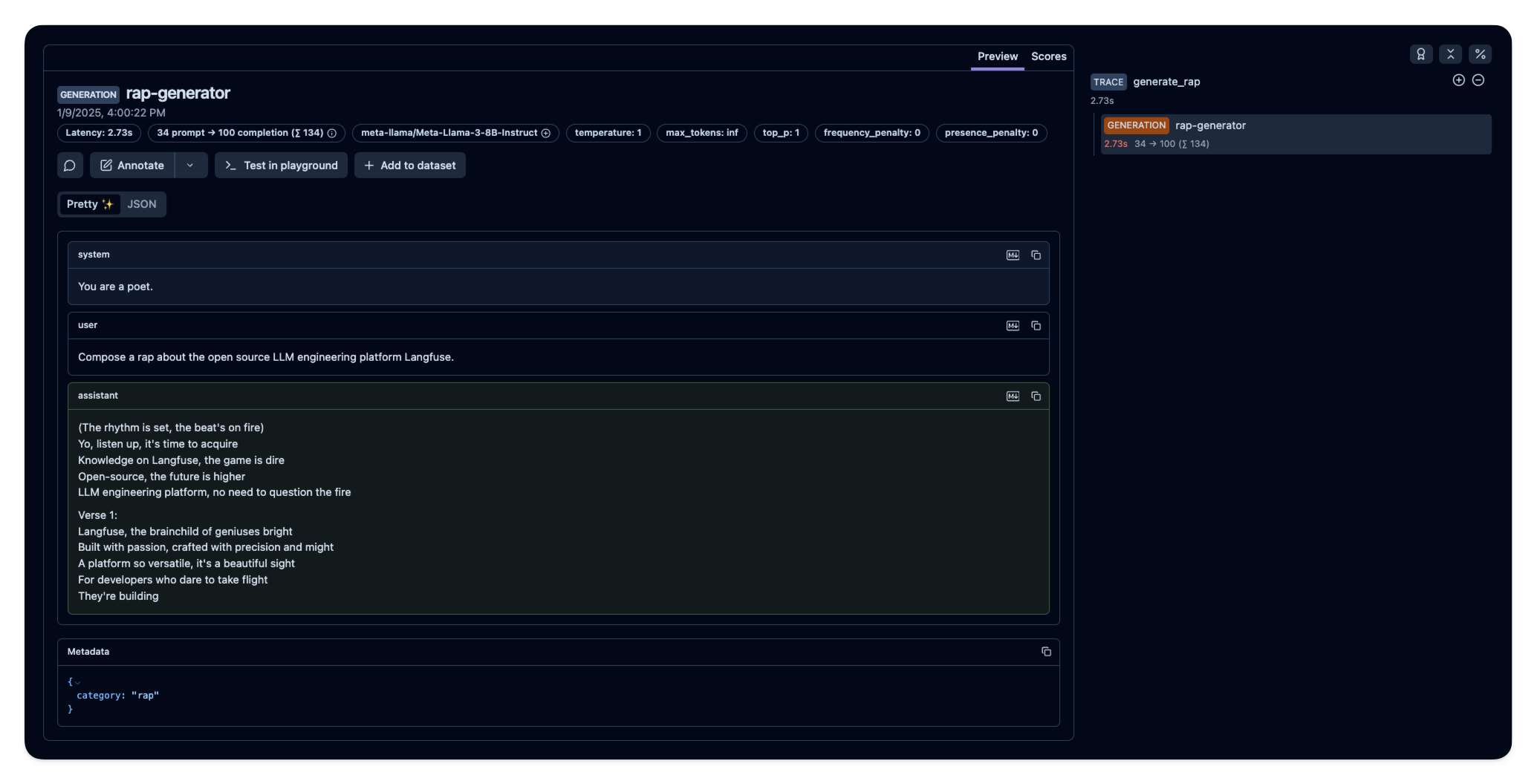

| "*[Example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/8c432652-ee56-4985-83aa-9e95945ca481?timestamp=2025-01-09T15%3A00%3A22.904Z)*" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Use Additional Langfuse Features (User, Tags, Metadata, Session)\n", | ||

| "\n", | ||

| "You can use additional Langfuse features by passing extra keyword arguments to `client.chat.completions.create`: `name` sets the trace name, and `user_id`, `session_id`, `tags`, and `metadata` attach the corresponding attributes to the trace." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "code", | ||

| "execution_count": 16, | ||

| "metadata": {}, | ||

| "outputs": [ | ||

| { | ||

| "name": "stdout", | ||

| "output_type": "stream", | ||

| "text": [ | ||

| "The translation of the text from English to German is:\n", | ||

| "\n", | ||

| "\"Das Sprachmodell produziert Text\"\n" | ||

| ] | ||

| } | ||

| ], | ||

| "source": [ | ||

| "completion_with_attributes = client.chat.completions.create(\n", | ||

| " name=\"translation-with-attributes\", # Trace name\n", | ||

| " model=\"tgi\",\n", | ||

| " messages=[\n", | ||

| " {\"role\": \"system\", \"content\": \"You are a translator.\"},\n", | ||

| " {\"role\": \"user\", \"content\": \"Translate the following text from English to German: 'The Language model produces text'\"}\n", | ||

| " ],\n", | ||

| " temperature=0.7,\n", | ||

| " metadata={\"language\": \"English\"}, # Trace metadata\n", | ||

| " tags=[\"translation\", \"language\", \"German\"], # Trace tags\n", | ||

| " user_id=\"user1234\", # Trace user ID\n", | ||

| " session_id=\"session5678\", # Trace session ID\n", | ||

| ")\n", | ||

| "print(completion_with_attributes.choices[0].message.content)" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "\n", | ||

| "\n", | ||

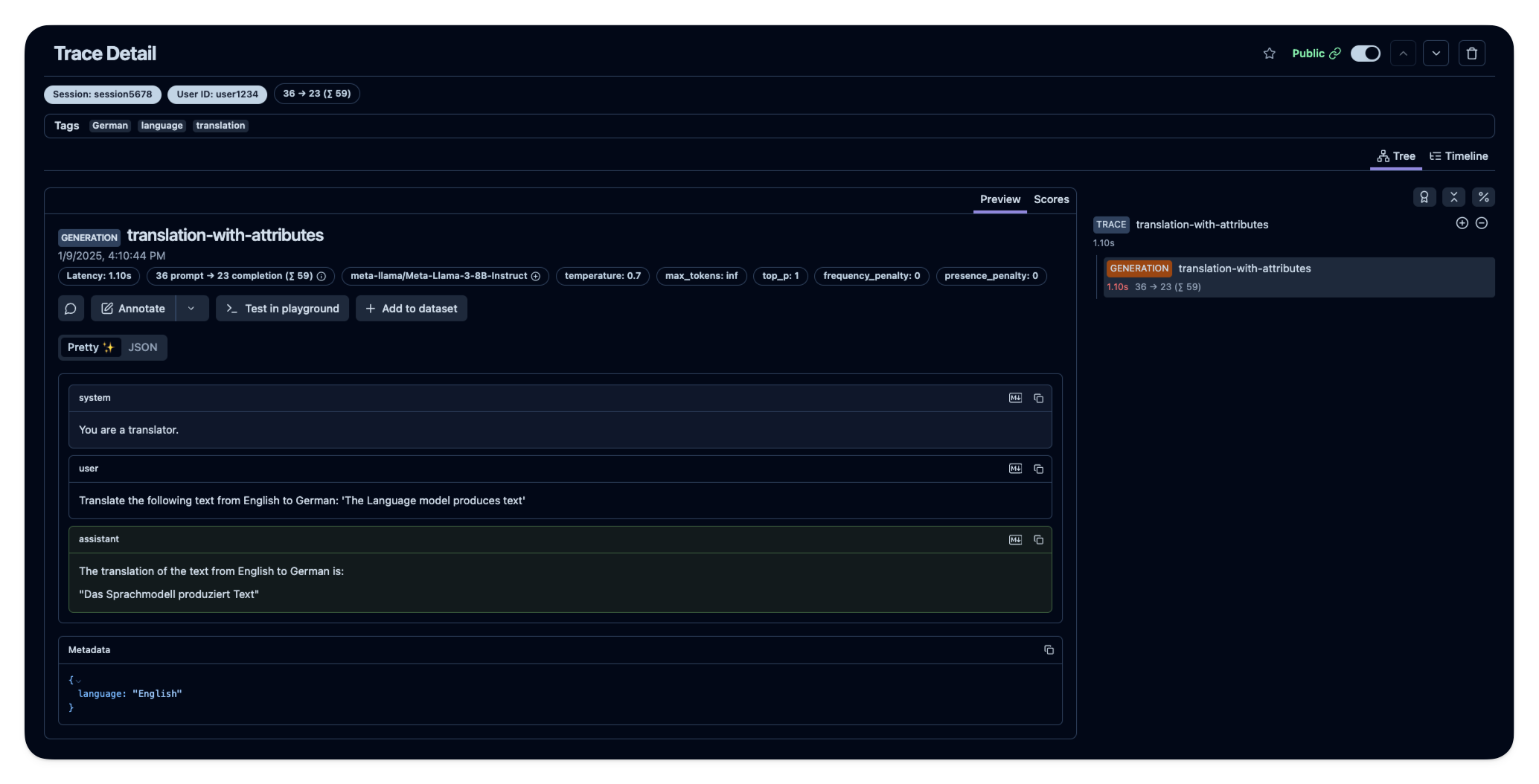

| "*[Example trace in Langfuse](https://cloud.langfuse.com/project/cloramnkj0002jz088vzn1ja4/traces/54ad697e-8b4c-45eb-b20a-233d236a813e?timestamp=2025-01-09T15%3A10%3A44.987Z)*" | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [ | ||

| "### Learn more\n", | ||

| "\n", | ||

| "- **[Langfuse Space on Hugging Face](https://huggingface.co/spaces/langfuse/langfuse-template-space)**: Langfuse can be deployed as a Space on Hugging Face. This allows you to use Langfuse's observability tools right within the Hugging Face platform. \n", | ||

| "- **[Gradio example notebook](https://langfuse.com/docs/integrations/other/gradio)**: This example notebook shows you how to build an LLM chat UI with Gradio and trace it with Langfuse.\n", | ||

| "\n", | ||

| "## Feedback\n", | ||

| "\n", | ||

| "If you have any feedback or requests, please create a GitHub [Issue](https://langfuse.com/issue) or share your ideas with the community on [Discord](https://langfuse.com/discord)." | ||

| ] | ||

| }, | ||

| { | ||

| "cell_type": "markdown", | ||

| "metadata": {}, | ||

| "source": [] | ||

| } | ||

| ], | ||

| "metadata": { | ||

| "kernelspec": { | ||

| "display_name": ".venv", | ||

| "language": "python", | ||

| "name": "python3" | ||

| }, | ||

| "language_info": { | ||

| "codemirror_mode": { | ||

| "name": "ipython", | ||

| "version": 3 | ||

| }, | ||

| "file_extension": ".py", | ||

| "mimetype": "text/x-python", | ||

| "name": "python", | ||

| "nbconvert_exporter": "python", | ||

| "pygments_lexer": "ipython3", | ||

| "version": "3.13.1" | ||

| } | ||

| }, | ||

| "nbformat": 4, | ||

| "nbformat_minor": 2 | ||

| } |
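The notebook's setup cells assign placeholder credentials (`sk-lf-...`, `pk-lf-...`, `hf_...`) and then build the client's `base_url` by appending `/v1/` to the model URL. A small pre-flight check can catch a forgotten placeholder before the first request is sent. The sketch below is plain Python under that assumption; the helpers `hf_base_url` and `unset_credentials` are hypothetical (not part of Langfuse or the OpenAI SDK), and the URL pattern simply mirrors the one used in the notebook's client cell.

```python
import os
from typing import Mapping

# Serverless Inference API prefix used in the notebook's client cell.
HF_INFERENCE_PREFIX = "https://api-inference.huggingface.co/models/"

# Environment variables the notebook sets before creating the client.
REQUIRED_VARS = (
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_HOST",
    "HUGGINGFACE_ACCESS_TOKEN",
)


def hf_base_url(model_id: str) -> str:
    """Hypothetical helper: OpenAI-compatible base URL for a Hub model id."""
    return HF_INFERENCE_PREFIX + model_id.strip("/") + "/v1/"


def unset_credentials(env: Mapping[str, str] = os.environ) -> list[str]:
    """Hypothetical helper: required variables that are missing or still placeholders."""
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "").strip()
        # The notebook's placeholders all end in "..." (e.g. "sk-lf-...", "hf_...").
        if not value or value.endswith("..."):
            problems.append(name)
    return problems


if __name__ == "__main__":
    print(hf_base_url("meta-llama/Meta-Llama-3-8B-Instruct"))
    missing = unset_credentials()
    if missing:
        print("Set these before creating the client:", ", ".join(missing))
```

`hf_base_url("meta-llama/Meta-Llama-3-8B-Instruct")` produces the same string the notebook builds by concatenation, so it could be passed as `base_url=` to `OpenAI(...)` unchanged.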

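The last example in the notebook passes the Langfuse attributes (`name`, `metadata`, `tags`, `user_id`, `session_id`) as extra keyword arguments next to the normal chat completion parameters. When several calls share the same user and session, one option is to assemble those keyword arguments in one place. This is a sketch assuming the Langfuse-wrapped client from `langfuse.openai` shown in the notebook, which accepts these keyword arguments; `build_traced_request` is a hypothetical helper, and `model="tgi"` follows the notebook's placeholder model name.

```python
from typing import Any, Optional


def build_traced_request(
    prompt: str,
    *,
    system: str,
    trace_name: str,
    user_id: Optional[str] = None,
    session_id: Optional[str] = None,
    tags: Optional[list[str]] = None,
    metadata: Optional[dict[str, Any]] = None,
    **model_params: Any,
) -> dict[str, Any]:
    """Hypothetical helper: keyword arguments for the Langfuse-wrapped
    client.chat.completions.create(...) call used in the notebook."""
    request: dict[str, Any] = {
        "name": trace_name,  # trace name shown in Langfuse
        "model": "tgi",  # placeholder; the real model is fixed by base_url
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
        **model_params,  # e.g. temperature=0.7
    }
    # Attach only the Langfuse attributes that were actually provided.
    optional = {"user_id": user_id, "session_id": session_id, "tags": tags, "metadata": metadata}
    request.update({key: value for key, value in optional.items() if value is not None})
    return request


if __name__ == "__main__":
    kwargs = build_traced_request(
        "Translate the following text from English to German: 'The language model produces text'",
        system="You are a translator.",
        trace_name="translation-with-attributes",
        user_id="user1234",
        session_id="session5678",
        tags=["translation", "language", "German"],
        temperature=0.7,
    )
    print(sorted(kwargs))
    # With the notebook's client: client.chat.completions.create(**kwargs)
```

Leaving out unset attributes keeps the request identical to a plain call when no user or session is known.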

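The notebook notes that `@observe()` can group multiple generations into a single trace, but its example wraps only one call. The sketch below makes two calls inside one decorated function, so that, with Langfuse installed and configured, both generations are expected to appear nested under the same trace. It takes the client as a parameter so it can be exercised with a stand-in object. The function name `poem_then_translation` and the generation names are hypothetical, and the no-op fallback decorator exists only so the sketch runs where the `langfuse.decorators` import used in the notebook is unavailable.

```python
from typing import Any

try:
    # Same import as in the notebook.
    from langfuse.decorators import observe
except ImportError:
    # No-op stand-in so this sketch runs without Langfuse; it records nothing.
    def observe(*args: Any, **kwargs: Any):
        def decorator(fn):
            return fn
        return decorator


@observe()  # with Langfuse, creates one trace and nests both generations in it
def poem_then_translation(client: Any, topic: str, language: str = "German") -> tuple[str, str]:
    # First generation: write a short poem.
    poem = client.chat.completions.create(
        name="poem-generator",
        model="tgi",
        messages=[
            {"role": "system", "content": "You are a poet."},
            {"role": "user", "content": f"Write a short poem about {topic}."},
        ],
    ).choices[0].message.content

    # Second generation: translate the output of the first one.
    translation = client.chat.completions.create(
        name="poem-translator",
        model="tgi",
        messages=[
            {"role": "system", "content": "You are a translator."},
            {"role": "user", "content": f"Translate this poem into {language}:\n\n{poem}"},
        ],
    ).choices[0].message.content

    return poem, translation
```

In the notebook this would be called as `poem, translation = poem_then_translation(client, "language models")`, with `client` being the `langfuse.openai` client created earlier.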