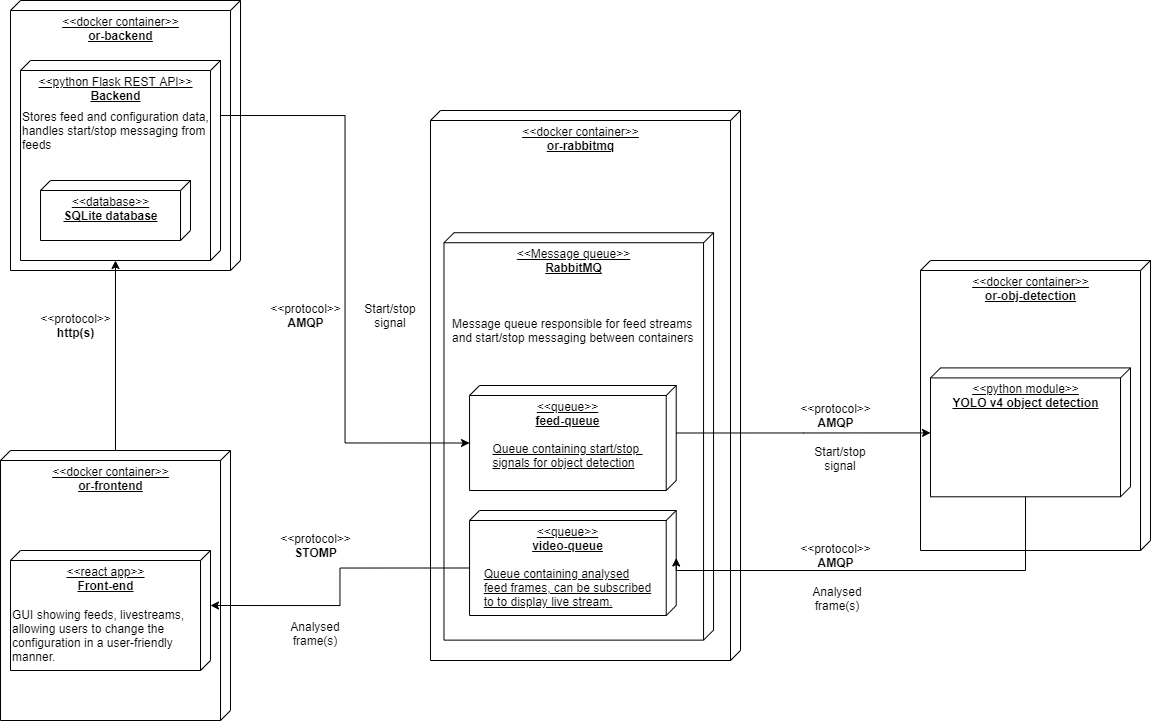

High Level Overview

Below is a high-level overview of the components that make up or-objectdetection. Each component gets a short summary explaining the role of its container.

The front-end is a small React application that provides a friendly GUI for creating and configuring video feeds. It also has a built-in editor for drawing detection lines; these lines are stored in the feed configuration and passed on to the object detection system. You can also view the analyzed frames of each feed in the front-end.

The back-end is a simple Flask REST API. It stores all data about the video feeds (name, feed_url, etc.) and communicates with the object detection container on behalf of the front-end. As the figure above shows, the back-end and the object detection container communicate through RabbitMQ; we use kombu as the messaging library for this.
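
As a rough illustration of how a back-end could send a start/stop signal with kombu, here is a minimal sketch. The exchange name, queue name, routing key, and message fields (`action`, `feed_id`, `feed_url`) are assumptions made for this example and are not taken from the or-objectdetection source.

```python
from typing import Any, Dict

# Hypothetical names: the exchange, queue, and routing key below are
# illustrative and are not taken from the or-objectdetection source.
EXCHANGE_NAME = "feed-signals"
QUEUE_NAME = "feed-signals"
ROUTING_KEY = "feed.signal"
VALID_ACTIONS = {"start", "stop"}


def build_signal(action: str, feed_id: int, feed_url: str) -> Dict[str, Any]:
    """Build the JSON-serializable body of a start/stop signal (assumed shape)."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    return {"action": action, "feed_id": feed_id, "feed_url": feed_url}


def publish_signal(amqp_url: str, signal: Dict[str, Any]) -> None:
    """Publish a signal to RabbitMQ with kombu (requires a running broker)."""
    # Imported lazily so build_signal can be used without kombu installed.
    from kombu import Connection, Exchange, Queue

    exchange = Exchange(EXCHANGE_NAME, type="direct")
    queue = Queue(QUEUE_NAME, exchange, routing_key=ROUTING_KEY)
    with Connection(amqp_url) as connection:
        producer = connection.Producer(serializer="json")
        # declare=[queue] makes sure the exchange and queue exist before publishing.
        producer.publish(
            signal,
            exchange=exchange,
            routing_key=ROUTING_KEY,
            declare=[queue],
        )
```

A Flask route handler could then call something like `publish_signal("amqp://guest:guest@rabbitmq:5672//", build_signal("start", 1, feed_url))`, where the broker URL is again only a placeholder.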

The object detection container is responsible for, you guessed it, the object detection. The current iteration available in develop is a small worker that listens for start/stop signals and starts analyzing a feed when a start signal is received. It can only analyze one feed at a time.
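
The one-feed-at-a-time behaviour can be pictured as a small state machine. The sketch below is a simplified illustration, not the project's actual worker: the class name, the signal fields (`action`, `feed_id`, `feed_url`), and the choice to reject a second start signal while a feed is running are all assumptions.

```python
from typing import Any, Callable, Dict, Optional


class SingleFeedWorker:
    """Tracks at most one active feed and reacts to start/stop signals."""

    def __init__(
        self,
        on_start: Callable[[str], None],
        on_stop: Callable[[], None],
    ) -> None:
        # on_start / on_stop are hypothetical hooks that would begin and end
        # the actual frame analysis.
        self._on_start = on_start
        self._on_stop = on_stop
        self.active_feed: Optional[int] = None

    def handle(self, signal: Dict[str, Any]) -> str:
        """Process one signal and report what happened."""
        action, feed_id = signal["action"], signal["feed_id"]
        if action == "start":
            if self.active_feed is not None:
                return "busy"  # a feed is already being analyzed
            self.active_feed = feed_id
            self._on_start(signal["feed_url"])
            return "started"
        if action == "stop":
            if self.active_feed != feed_id:
                return "ignored"  # stop for a feed that is not running
            self._on_stop()
            self.active_feed = None
            return "stopped"
        return "ignored"
```

In a real worker, `handle` would be called from the message queue callback for each received signal.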

The video feed is pulled from its URL using a Python wrapper around libVLC, which supports most common input types. We currently support two types of feeds:

- YouTube live feeds

- IP cams

We have worked on a proper asynchronous thread manager that can spawn multiple video feed analysis threads to process several feeds at once. However, this requires a change to the way messaging through RabbitMQ is handled, so it is not implemented in the current iteration. It would be a welcome improvement.
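
To give an idea of what such a manager might look like, here is a minimal sketch built on the standard library's `threading` module. It is not the unreleased implementation mentioned above; the class name, method names, and the `analyze(feed_url, stop_event)` callback signature are assumptions for illustration.

```python
import threading
from typing import Callable, Dict, List, Tuple


class FeedThreadManager:
    """Runs one analysis thread per feed and stops them on request."""

    def __init__(self, analyze: Callable[[str, threading.Event], None]) -> None:
        # analyze is a hypothetical function that processes frames from
        # feed_url until stop_event is set.
        self._analyze = analyze
        self._lock = threading.Lock()
        self._feeds: Dict[int, Tuple[threading.Thread, threading.Event]] = {}

    def start(self, feed_id: int, feed_url: str) -> bool:
        """Start analyzing a feed; returns False if it is already running."""
        with self._lock:
            if feed_id in self._feeds:
                return False
            stop_event = threading.Event()
            thread = threading.Thread(
                target=self._analyze, args=(feed_url, stop_event), daemon=True
            )
            self._feeds[feed_id] = (thread, stop_event)
            thread.start()
            return True

    def stop(self, feed_id: int, timeout: float = 5.0) -> bool:
        """Signal a feed's thread to stop; returns False if it is not running."""
        with self._lock:
            entry = self._feeds.pop(feed_id, None)
        if entry is None:
            return False
        thread, stop_event = entry
        stop_event.set()
        thread.join(timeout)
        return True

    def active_feeds(self) -> List[int]:
        """Return the ids of all feeds currently being analyzed."""
        with self._lock:
            return sorted(self._feeds)
```

With a manager like this, each start/stop signal would need to carry a feed identifier so it can be routed to the right thread, which is one reason the messaging would have to change.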

RabbitMQ is the message queue we chose for asynchronous communication between containers. It handles the start/stop signals between the back-end and the object detection container. It also receives the analyzed frames from the object detection container in a queue, which any consumer of your choice can subscribe to. In the default application, the front-end consumes the analyzed frames queue using the STOMP plugin to display live video feeds in the browser.